Since around the year 2000, LCD (liquid-crystal display) and later LED (light-emitting diode) monitors gradually became the de facto standard, replacing the earlier CRT (cathode ray tube) technology. Over the years the size and resolution of monitors have increased significantly. These days, selecting one to buy is really just about choosing the desired screen size and whether it’s capable of 1080p or 4K resolution. No consideration of colour depth is required, for instance.

Of course, during the 1980s and 1990s this wasn’t the case. A series of acronyms, such as EGA and VGA, were frequently stated to give an indication of a monitor’s capabilities. For a number of years now, even low-end or built-in video adapters on motherboards have had no problem displaying millions of colours on a 1920 x 1080 resolution screen. In the past, however, it was more pertinent to ensure that the video adapter was capable enough to handle the monitor’s capabilities, or vice versa.

In this post, I’ll be covering the standards used by IBM and compatible PCs, from CGA and MDA in the early 1980s through to XGA in the 1990s. Before closing out, there’ll be mention of some of the other standards that became available. PCem is used for many of the screenshots due to the lack of physical hardware.

MDA (Monochrome Display Adapter)

MDA dates back to the original IBM PC from 1981 and, as the name suggests, did not support colour output. It used a Motorola 6845 chip with 4 KB of video memory and supported only 80 columns by 25 rows of text at 720 x 350 resolution, so it was only really suitable for spreadsheets and word processing. A severe limitation was that it couldn’t address individual pixels, and was therefore restricted to displaying entire characters in text mode. These characters came from the extended ASCII (American Standard Code for Information Interchange) character set known as Code Page 437.
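To put those numbers in perspective, here is a rough sketch of the arithmetic in Python. The 80 x 25, 720 x 350, and 4 KB figures come from the paragraph above. The two bytes per character cell (one for the character, one for its attribute) is my assumption about how the text buffer is laid out, and the bitmap figure is purely hypothetical, since MDA had no such mode.

```python
# Figures taken from the text: 80 x 25 characters on a 720 x 350 pixel screen.
COLUMNS, ROWS = 80, 25
WIDTH, HEIGHT = 720, 350
VIDEO_MEMORY = 4 * 1024  # 4 KB of video memory

# Assumption: each cell is stored as one character byte plus one attribute byte.
BYTES_PER_CELL = 2

cell_width = WIDTH // COLUMNS    # pixels per character, horizontally
cell_height = HEIGHT // ROWS     # pixels per character, vertically
text_buffer = COLUMNS * ROWS * BYTES_PER_CELL

# Hypothetical: what a 1-bit-per-pixel bitmap of the same screen would need.
bitmap_bytes = WIDTH * HEIGHT // 8

print(f"Character cell: {cell_width} x {cell_height} pixels")
print(f"Text buffer: {text_buffer} of {VIDEO_MEMORY} bytes")
print(f"1-bit bitmap would need: {bitmap_bytes} bytes")
```

Under those assumptions a full screen of text fits comfortably in 4 KB, whereas a bitmap of the same screen would have needed several times the memory on the card.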

Code Page 437 character set.

Due to this, the closest it came to showing graphics on the screen was getting creative with the ASCII characters. The vast majority of games, and Windows 1.0 when it was released in 1985, had no support for MDA.

The original MDA card manufactured by IBM also included a parallel printer port and, given that MDA rendered text more sharply than CGA, those two features made it the more popular option for businesses at the time. Clone cards were likely to have only a single video port.

MDA was best suited to text based DOS applications as shown here using PCem.

What connected to an MDA card via a DE-9 connector would usually have been a monochrome monitor such as IBM’s 5151, which shipped with the original IBM PC. The display in this case would appear all green, thanks to the use of P39 phosphor. Other colours to be seen were white or amber, depending on the monitor.

How the text appeared on actual hardware.

CGA (Colour Graphics Adapter)

CGA was released for the IBM PC in the same year as MDA. The original IBM CGA card had 16 KB of video memory, supported at most 640 x 200 resolution, and had 4-bit colour (16 colours) available. In order to utilise the full 16-colour palette, the resolution was limited to just 160 x 100, and that was only achievable through a text mode. Using a 4-colour palette, the resolution could increase up to 320 x 200, and the maximum resolution could use only 2 colours. The ability to support bitmapped graphics, and obviously colour, were its main advantages over MDA, though the quality of text was compromised, appearing more jagged on screen.
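The trade-off between resolution and colours makes more sense once the 16 KB of video memory is taken into account. The following is a minimal sketch in Python, assuming the pixels are simply packed one after another with no padding. The function names are my own for illustration, and the 320 x 200 mode in 16 colours is hypothetical, included only to show that it would not have fitted.

```python
CGA_MEMORY = 16 * 1024  # 16 KB on the original IBM CGA card

# (width, height, colours) for the two graphics modes described above.
modes = [
    (320, 200, 4),
    (640, 200, 2),
]

def bits_per_pixel(colours: int) -> int:
    """Smallest number of bits that can index `colours` distinct values."""
    return max(1, (colours - 1).bit_length())

def frame_bytes(width: int, height: int, colours: int) -> int:
    """Bytes needed to store one full screen at the given colour count."""
    return width * height * bits_per_pixel(colours) // 8

for width, height, colours in modes:
    needed = frame_bytes(width, height, colours)
    fits = needed <= CGA_MEMORY
    print(f"{width} x {height} in {colours} colours: {needed} bytes, fits: {fits}")

# Hypothetical mode: 320 x 200 with all 16 colours would need twice the memory.
print(f"320 x 200 in 16 colours: {frame_bytes(320, 200, 16)} bytes")
```

Both real graphics modes come out at the same size, just under the 16 KB available, which is why doubling the horizontal resolution meant halving the bits per pixel.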

MS-DOS Editor this time in CGA mode. Visually more appealing though at a lower resolution.

CGA proved to be much more popular for home use, and over the last half of the decade it became an affordable option as newer standards came about. Officially, Windows supported CGA up to version 3.0, though the CGA video driver from 3.0 could work with 3.1.

Notice below that as Windows 3.0 was running at 640 x 200 resolution, only black and white is used. The colour palette in Paintbrush for instance only had dithered patterns available.

Windows 3.0 in CGA.

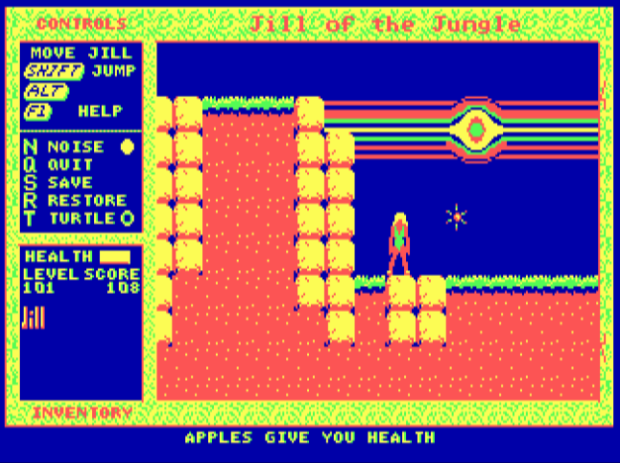

Jill of the Jungle, a game that I played in my childhood, was one of the many games from the era that supported CGA.

Jill of the Jungle in CGA using a 4-colour palette.

When it came to monitors, plenty of models became available during the 1980s, though the one that started it all was the IBM 5153, released in 1983. Although CGA had already existed for two years by that stage, the initial expectation was that the card would be plugged into an analogue TV set using a radio frequency modulator.

EGA (Enhanced Graphics Adapter)

Released in 1984, EGA was a significant improvement over CGA, albeit an expensive option at the time. It was capable of displaying 6-bit colour (a 64-colour palette), though only 16 colours could be used at once, at up to 640 x 350 resolution. The default palette comprised all the colours available from CGA, though any of them could be substituted with the other colours available with EGA.
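For anyone curious how 6 bits turn into 64 colours, here is a small sketch in Python. It assumes the commonly documented layout of two bits per channel, with bits 0 to 2 being the stronger blue, green, and red signals and bits 3 to 5 the weaker ones. The function name, the scaling to 8-bit RGB values, and the default palette list are my own illustration rather than anything taken from IBM’s documentation.

```python
from typing import Tuple

def ega_to_rgb(value: int) -> Tuple[int, int, int]:
    """Convert a 6-bit EGA colour number (0-63) to 8-bit-per-channel RGB.

    Assumed layout: bits 0, 1, 2 are the stronger blue, green, red signals
    and bits 3, 4, 5 are the weaker blue, green, red signals.
    """
    if not 0 <= value <= 63:
        raise ValueError("EGA colour numbers range from 0 to 63")

    def channel(strong_bit: int, weak_bit: int) -> int:
        # Two bits per channel give four levels: 0x00, 0x55, 0xAA, 0xFF.
        level = 2 * ((value >> strong_bit) & 1) + ((value >> weak_bit) & 1)
        return level * 0x55

    return channel(2, 5), channel(1, 4), channel(0, 3)

# Assumed default palette: the 16 CGA colours expressed as EGA colour numbers.
DEFAULT_PALETTE = [0, 1, 2, 3, 4, 5, 20, 7, 56, 57, 58, 59, 60, 61, 62, 63]

for index, value in enumerate(DEFAULT_PALETTE):
    red, green, blue = ega_to_rgb(value)
    print(f"Palette entry {index:2d} = EGA colour {value:2d} = #{red:02X}{green:02X}{blue:02X}")
```

Swapping any entry in the 16-entry list for another number between 0 and 63 is the software equivalent of substituting a palette colour.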

IBM’s EGA card had 64 KB of memory, though this could be expanded to 128 KB using a daughter board. Third-party cards later became available with up to 256 KB of memory. Many video cards supported a CGA monitor via a switch, ensuring compatibility with colours and text modes. The DE-9 connector was still used, though the functions of pins 2, 6, and 7 had changed since CGA.

Windows 3.0, shown below, sees a major improvement in usability over CGA; note the extra clarity of icons and text.

Windows 3.0 in EGA.

Returning to Jill of the Jungle, using EGA the game is instantly more playable. Moving objects are more clearly visible and the levels show some texture in appearance.

Jill of the Jungle in EGA using a 16-colour palette.

IBM released the 5154 EGA monitor in 1984 to coincide with the video card, with both available for their new PC/AT model computer. Much of the software made between 1986 and 1991 could support EGA.

VGA (Video Graphics Array)

IBM went and created another video standard known as VGA in 1987, introduced with their PS/2 line of computers. It was one of several new standards incorporated into the PS/2 that spread across the entire PC industry. Even 30 years later in 2017, it’s still considered the most basic form of video output for most PCs. Over time the VGA acronym became standard terminology for referring to the DE-15 connector used by compatible monitors and projectors. This connector is, to an extent, on its way out, albeit slowly, with motherboard manufacturers excluding it from some of their models. In 2015 Intel dropped VGA support altogether in their Skylake microarchitecture.

If you’re wondering why the name changed from adapter to array, it was due to the use of a single chip, whereas the earlier MDA, CGA, and EGA standards used a number of chips for the same function. This meant that for the first time it was practical to place VGA chips directly onto the motherboard, negating the need for a separate video card. For dedicated VGA cards, the physical size of the card could now be greatly reduced. The earliest VGA cards came with 256 KB of memory, and an influx of cards manufactured by various vendors saw widespread adoption.

Probably thanks to Windows 3.0 and beyond, VGA is usually associated with 640 x 480 resolution in 16 colours. It was, however, compatible with EGA’s 640 x 350 graphics mode, and supported up to 256 colours at 320 x 200 resolution. While still a very low resolution, it was a major uplift from only having 4 colours with CGA.

Windows 3.0 didn’t improve quite as dramatically as when changing from CGA to EGA, though the text styling is more refined and generally better proportioned, as it had appeared vertically stretched on the EGA display.

Windows 3.0 in VGA.

Thanks to running at 320 x 200 resolution, Jill of the Jungle was at its best. Even if you completed the game on CGA, the improvement was so good that you just needed to play from the start again to enjoy the visuals.

Jill of the Jungle using a 256-colour palette.

By the late 1980s a reasonable range of VGA monitors was available from the likes of IBM, NEC, Mitsubishi Electronics, and Magnavox, among others. A February 1988 issue of InfoWorld magazine showed IBM with a range of monitors at various price points. The entry-level IBM 8503, a 12″ monochrome monitor, was sold for $250 US. At the top end there was the IBM 8514 for $1,550 US, not to be confused with the 8514/A video standard released at the same time. In between there were also the IBM 8512 and 8513, both colour, at 14″ and 12″ in size respectively.

SVGA (Super Video Graphics Array)

Normally said as Super VGA, it was released not long after VGA, although it wasn’t an official standard as such by IBM. It was originally defined by VESA (Video Electronics Standards Association) to combat the inconsistencies between manufacturers claiming to support SVGA in their products. At first it was defined as being capable of displaying 800 x 600 resolution with 4-bit pixels, meaning that each pixel could be any of 16 colours. Later this included 1,024 x 768 resolution with 8-bit pixels and beyond. Put simply, if it was an enhancement over VGA, then it would be considered SVGA.

Having a SVGA monitor and the right video card allowed a number of configurations.

SVGA monitors, and VGA monitors for that matter, used analogue signals, so in practice they relied heavily on what the video card could support. The video card converts the digital values to the specific analogue signal levels needed to display the required colour. This meant an SVGA monitor may only show 256 (8-bit) colours on one system, but the same monitor could show up to 16.7 million (24-bit) colours on another system with a more capable video card. 32-bit colour was also frequently available, however this has the same number of colours as 24-bit, with the remaining 8 bits dedicated to transparency and gradient effects. Video cards for SVGA would start at 512 KB of memory and go up to multiple megabytes over the years.
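The colour counts above are simply powers of two, which a few lines of Python can confirm. The second half of the sketch shows how a 32-bit pixel can hold 24 bits of colour plus 8 spare bits. The alpha, red, green, blue ordering used here is one common layout that I’ve assumed for illustration, and the function names are hypothetical; actual video cards differed in how they arranged or used the extra byte.

```python
def colour_count(bits: int) -> int:
    """Number of distinct colours that `bits` bits per pixel can represent."""
    return 2 ** bits

for bits in (4, 8, 16, 24):
    print(f"{bits}-bit colour: {colour_count(bits):,} colours")

def pack_argb(alpha: int, red: int, green: int, blue: int) -> int:
    """Pack four 8-bit values into one 32-bit pixel (assumed ARGB ordering)."""
    return (alpha << 24) | (red << 16) | (green << 8) | blue

def unpack_argb(pixel: int):
    """Split a 32-bit pixel back into its (alpha, red, green, blue) parts."""
    return (
        (pixel >> 24) & 0xFF,
        (pixel >> 16) & 0xFF,
        (pixel >> 8) & 0xFF,
        pixel & 0xFF,
    )

# A half-transparent orange: the colour itself still only uses 24 bits.
pixel = pack_argb(128, 255, 165, 0)
print(f"Packed pixel: 0x{pixel:08X}")
print(f"Unpacked: {unpack_argb(pixel)}")
```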

Windows 95 provided generic support for various SVGA resolutions available at the time.

Windows 95 was the first version in which the model of monitor being used could be specified. Generic SVGA monitors could be selected as shown here. The resolution stated was the highest resolution the monitor was capable of, which was typically associated with the size of the CRT. For example, a 14″ CRT monitor at the time would usually be limited to 1,024 x 768 resolution, whereas if you owned a 17″ monitor you were likely able to use 1,280 x 1,024 resolution, provided the video card had enough memory.

Below is a table showing what a typical 1990s monitor could support before consideration of the video card. This isn’t entirely definitive, however, as the occasional high-end monitor may have supported the next step up in resolution when most of the same size wouldn’t. Unlike LCD monitors, where the specified size is exactly the viewable area of the screen, a CRT loses about an inch of viewable area, partly thanks to the plastic bezel. Regardless of the CRT monitor, the highest resolution it could support wasn’t necessarily the most optimal, unlike with modern displays. A 14″ CRT was typically best at 640 x 480 resolution, though 800 x 600 was still acceptable for many people, whereas a 17″ monitor was often best at 1,024 x 768. Monitors were not manufactured equally, however, so some of the better quality monitors such as the Sony Trinitrons were fine at higher resolutions without causing too much eye strain.

Monitor size vs. resolution.

The following table specifies the colour palette supported depending on the screen resolution and amount of memory available for video.

Screen resolution vs. memory size and colour palette.
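The relationship between resolution, memory, and colours can be approximated with a little arithmetic. The Python sketch below assumes the whole screen has to fit in video memory with no padding, and only considers 4, 8, 16, and 24 bits per pixel. Real video cards and drivers may have offered a different set of modes, so treat the output as a rough guide rather than a reproduction of any particular card’s specifications. The function name and the list of memory sizes are my own choices.

```python
KB = 1024
MB = 1024 * KB

# Colour depths in bits per pixel, tried from highest to lowest.
DEPTHS = (24, 16, 8, 4)

def max_depth(width: int, height: int, memory_bytes: int):
    """Highest colour depth whose full screen fits in memory, or None."""
    for bits in DEPTHS:
        if width * height * bits // 8 <= memory_bytes:
            return bits
    return None

resolutions = ((640, 480), (800, 600), (1024, 768), (1280, 1024))
memory_sizes = (512 * KB, 1 * MB, 2 * MB, 4 * MB)

for memory in memory_sizes:
    print(f"{memory // KB} KB of video memory:")
    for width, height in resolutions:
        bits = max_depth(width, height, memory)
        if bits is None:
            print(f"  {width} x {height}: not enough memory")
        else:
            print(f"  {width} x {height}: {bits}-bit ({2 ** bits:,} colours)")
```

For instance, under these assumptions a 1 MB card could manage 1,024 x 768 in 256 colours but not in 16-bit colour, since the latter needs about 1.5 MB.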

XGA (Extended Graphics Array)

Released in 1990, XGA was the last true standard by IBM. It wasn’t a direct replacement for SVGA, but rather more specific to a subset of the functionality found with SVGA. The true predecessor was their 8514/A standard from 1987. Some of the later PS/2 computer models were manufactured to officially support XGA.

As a generalisation, it was frequently considered that XGA’s resolution is 1,024 x 768 with 256 colours compared to SVGA’s 800 x 600, though in reality this is rather ambiguous, as SVGA was capable of XGA’s resolution and higher.

XGA for the most part didn’t really take off as a widely used acronym and, perhaps lazily, the monitors were frequently referred to as being SVGA anyway.

With the exception of SVGA, the standards covered so far were official ones developed by IBM, who reigned supreme during 1980s computing. There were indeed some others, though I personally didn’t get first-hand experience with them, nor did I know anyone who had one of the following.

Hercules

In 1982 a company named Hercules Computer Technology Inc. was formed in Hercules, just north of San Francisco, by two people, Van Suwannukul and Kevin Jenkins. So much for thinking they were inspired by Ancient Rome! The Hercules Graphics Card was released in the same year and became a competing standard against IBM. This video card was more aligned with the MDA standard, though it could allow bitmapped graphics to be displayed on a monochrome monitor via the use of drivers. Later cards supported colour, and many clones were manufactured throughout the 1980s.

8514/A

Yet another standard from IBM, released in 1987. It had nowhere near the same number of cloned video cards as VGA did. IBM’s 8514/A video cards were designed for another standard of theirs also released for their PS/2 computers, the MCA bus. MCA was a competitor to the ISA bus, though it wasn’t supported much by the wider IT industry. Many of the cloned video cards were altered for ISA. The 8514/A could support up to 1,024 x 768 resolution with 256 colours. Whereas other standards such as EGA and VGA relied more on the CPU to figure out what to display, the CPU in this case would instead send a command to the video card to process. IBM released a monitor named the 8514 to complement the use of this video card.

There were other obscure standards such as PGC (Professional Graphics Controller), often referred to as PGA, though unless your job involved CAD during the 1980s, you were unlikely to come across one.

What’s listed here is what you’re likely to come across from using PCem or working with computers of this vintage.